This has been one of my longest-running projects. I have been trying to get a proper media storage solution working for as long as I can remember. It started when I had a 4 bay DroboProFS. It was good for years but always had some gotchas. It was permanently tethered to my MacBook Pro, which was running Plex Media Server, so to watch anything around the house my laptop had to be on and connected. This worked for years, but slowly the drives filled up and then started dying.

I managed to pick up a much bigger 12 bay rack mount Drobo, but its networking was too slow and I couldn’t install Plex on it, so I would still have had to run Plex on my laptop and stream from the Drobo over the network. Not ideal. I sold the Drobo at a profit, which was enough to look at building a proper home media server. I got a good deal on a 6th gen i5 rig with 16GB of RAM and an empty case. At the same time, I picked up a bunch of 1TB drives from my old work for $10 each and loaded the machine up with them.

The basic idea was simple: build a machine that could run Plex, store all my media and project files, act as a download server and FTP server, and have built-in redundancy. Because the motherboard had neither enough SATA ports nor hardware RAID, I got a couple of 4 port SATA cards and ran a software RAID on Linux Mint using MDADM. For over a year this worked great, but then, as usual, drives started failing.

The Final Form

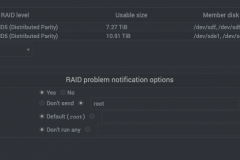

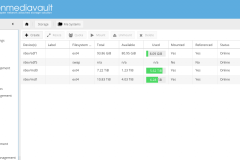

I finally got sick of space limits and drive issues, so I did a case swap and a full rebuild. I ended up with 4x 2TB drives and 3x 4TB drives in two MDADM RAID5 arrays, so each array could survive a single drive failure (two failures in total, as long as they hit different arrays). Surely I would be fine from here on out, right? Wrong. Drives kept dropping out of the arrays seemingly at random, and not always the same drives or the same SATA ports. After a lot of debugging (including buying a new 8 port SATA board), it became obvious that the noise I took for drive failures was actually a Seagate HDD “feature”: the little chirping/clicking was the drive telling me there was a problem with its power. I did a quick power supply swap with one from a project I wasn’t using and everything came alive. All the drives rebuilt their RAID arrays and no drives dropped out anymore.
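As a worked check of what that layout gives you: RAID5 spends one drive’s worth of space on parity, so usable capacity is (drives - 1) × drive size, and each array tolerates one failed drive. The small shell sketch below just does that arithmetic for the two arrays; `raid5_usable` is a hypothetical helper name, and the figures are raw marketing TB before filesystem overhead.

```shell
#!/bin/sh
# Worked RAID5 capacity check: one drive's worth of space holds parity,
# so usable space = (drives - 1) * drive size, and one drive can fail.

# raid5_usable DRIVES SIZE_TB -> prints usable TB (hypothetical helper name)
raid5_usable() {
  if [ "$1" -lt 3 ]; then
    echo "RAID5 needs at least 3 drives" >&2
    return 1
  fi
  echo $(( ($1 - 1) * $2 ))
}

small=$(raid5_usable 4 2)   # 4x 2TB array
large=$(raid5_usable 3 4)   # 3x 4TB array
echo "4x 2TB RAID5: ${small}TB usable, survives 1 drive failure"
echo "3x 4TB RAID5: ${large}TB usable, survives 1 drive failure"
echo "Total: $(( small + large ))TB usable out of $(( 4 * 2 + 3 * 4 ))TB raw"
```

This works out to 6TB and 8TB, so 14TB usable out of 20TB raw.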

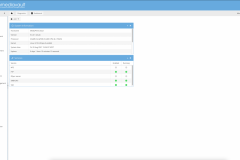

I still had some minor performance issues with the software arrays, which I think came down to using a cheap 8 port SATA controller instead of a proper RAID card. Because this is mostly a read-only system, though, write performance wasn’t much of an issue. I chose Webmin for remote management, which meant I could run the server headless downstairs, plugged into the network. It lets you monitor the system and manage the file shares and FTP server, among other things.

One thing worth doing is upgrading MDADM to 4.1 by building it from source, as it is much better at detecting and rebuilding broken arrays. A couple of useful MDADM and fstab lines are below. You need to force-assemble broken arrays, and mount the filesystem with the right options at boot to be able to write to it. If an array fails at boot, you will need to comment out its fstab line to be able to boot and rebuild the array, a process I was very familiar with by this point.

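- Force-assemble and start a broken array: `sudo mdadm --verbose --assemble --force --run /dev/md0 /dev/sdf /dev/sdg /dev/sdh /dev/sdi /dev/sdj`

- fstab entry that mounts the array read/write at boot and shows it in the file manager as “Archive” (`user` implies `noexec`, so `exec` is added back): `/dev/md1 /mnt/archive ext4 rw,user,exec,x-gvfs-show,x-gvfs-name=Archive 0 0`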
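A dropped member shows up in `/proc/mdstat` before anything else goes wrong: each array’s status field reads like `[UUUU]`, with an underscore in place of each missing drive, and an array that failed to assemble at boot is listed as `inactive`. Below is a minimal sketch, assuming the standard `/proc/mdstat` layout, of a hypothetical `degraded_arrays` helper that lists those arrays so you know which one needs the forced assemble.

```shell
#!/bin/sh
# Minimal sketch: list MD arrays that /proc/mdstat reports as degraded or inactive.
# Optional argument: a file in /proc/mdstat format (defaults to the live one).
degraded_arrays() {
  awk '
    # Array header, e.g. "md0 : active raid5 sdf[0] sdg[1] ..."
    /^md[0-9]+ :/ {
      dev = $1
      if ($3 == "inactive") { print dev, "inactive"; dev = "" }
      next
    }
    # Member-status field on a following line, e.g. "[4/3] [UUU_]"
    dev != "" && /\[[U_]+\]/ {
      if ($0 ~ /\[[U_]*_[U_]*\]/) print dev, "degraded"
      dev = ""
    }
  ' "${1:-/proc/mdstat}"
}
```

Run it with no argument to read the live `/proc/mdstat`; for any array it reports, `sudo mdadm --detail /dev/md0` (substituting the reported name) shows which member dropped out. Adding `nofail` to the fstab options should also let the machine finish booting when the array is missing, instead of having to comment the line out.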

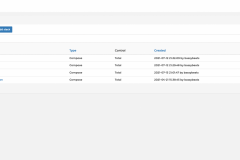

The final build has the following setup on it:

- i5 6500 CPU

- 16GB DDR4 RAM

- 4x 2TB Seagate Drives

- 3x 4TB Seagate Drives

- 1x 8 port PCI-E SATA expansion board

- Fractal Design R2 case

- 3x Fractal Design case fans

- CryoRig CPU cooler

- Zalman GV Series 600W Power Supply (this will get replaced with a quieter unit one day)

A slight update

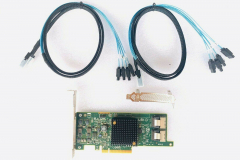

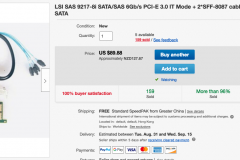

I got sick of drives falling out of the RAID and cheap SATA controllers dying, so I finally invested in a good LSI card to sort it out once and for all. It’s an LSI SAS 9217-8i controller flashed to IT mode, so it passes the drives straight through to the OS instead of running its own RAID, which is exactly what MDADM wants. It is a PCIe 3.0 x8 card, so I know I won’t run into bandwidth bottlenecks (not that my RAID5 arrays would push it that hard).

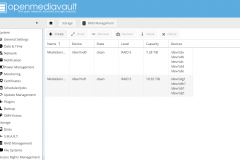

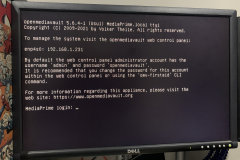

Open Media Vault 5

Along with the new LSI card, I was also getting sick of managing the MDADM arrays manually through Webmin’s Linux RAID interface. After some digging I found that Open Media Vault also uses MDADM for its RAID arrays, so I flashed it to a USB stick, booted it and saw that it recognised my existing arrays. So it was time to abandon Linux Mint and move over to a proper NAS distro. It wasn’t without its learning curve, though, as there is no desktop at all; everything is done from the command line or the web interface. On first boot it wouldn’t display its IPv4 address, so I had to switch its network interface over and set a reserved IP on the router to reach it.
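On a headless box the quickest way to find the address is from the local console. A minimal sketch, assuming the standard iproute2 `ip` tool that ships with Debian (which OMV is built on): a hypothetical `ipv4_addresses` helper that prints each interface holding a global IPv4 address. If nothing shows up, OMV’s own `omv-firstaid` menu is the usual place to reconfigure the interface.

```shell
#!/bin/sh
# Minimal sketch: print "interface address" for every global IPv4 address.
ipv4_addresses() {
  # -4: IPv4 only, -o: one line per address; field 4 is "address/prefix"
  ip -4 -o addr show scope global | awk '{ sub(/\/.*/, "", $4); print $2, $4 }'
}
```

The printed address is also what you would reserve on the router so the web interface stays at the same IP.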

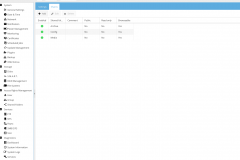

For most of the setup I just followed DB Tech’s tutorials. I ended up with the following:

- Open Media Vault 5

- 2x Raid 5 arrays

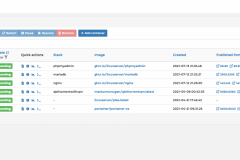

- Docker and Portainer installed

- QBitTorrent + PIA VPN

- Plex

- Nginx + MariaDB + PHPMyAdmin
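With everything running in Docker, one quick way to confirm the whole stack came back after a reboot is to compare `docker ps` against the containers you expect. This is a minimal sketch; `missing_containers` is a hypothetical helper, and the container names in the example below are assumptions, so substitute whatever names you gave the containers in Portainer.

```shell
#!/bin/sh
# Minimal sketch: print each expected container that is not currently running.
# Needs to run as root or as a user in the docker group.
missing_containers() {
  running=$(docker ps --format '{{.Names}}')
  for name in "$@"; do
    # -x: whole-line match, -F: treat the name as a fixed string, not a regex
    printf '%s\n' "$running" | grep -qxF "$name" || echo "$name"
  done
}
```

For example, `missing_containers plex qbittorrent nginx mariadb phpmyadmin` prints nothing when all five are up, and prints the name of any that are not.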

Transcoding

My last hurdle was getting hardware transcoding working. Plex has no hardware acceleration for AMD GPUs under Linux, so for a graphics card you are stuck with Nvidia. I opted for the GTX 1050 Ti as it supports 3 simultaneous 1080p streams, needs no PCIe power connector and was affordable. Getting the Nvidia driver installed under Open Media Vault 5 was no easy task, and I also had to expose the GPU to Docker so the Plex container could use it. I also installed NVTop on the command line so I could verify that Plex was using the GPU for transcoding while I was streaming, and that it really could handle multiple streams at once.
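NVTop is the easy visual check. For a scriptable one, `nvidia-smi` can report how many hardware encoder (NVENC) sessions are open, and that count should go up as Plex starts hardware-transcoding video streams. A minimal sketch, assuming the Nvidia driver is installed on the host so `nvidia-smi` is on the PATH and the card is GPU 0; the helper names are hypothetical.

```shell
#!/bin/sh
# Minimal sketch: check how many NVENC (hardware encoder) sessions are open.
# Assumes the Nvidia driver is installed on the host and the card is GPU 0.

# Print the number of active encoder sessions on GPU 0.
encoder_sessions() {
  nvidia-smi --id=0 --query-gpu=encoder.stats.sessionCount \
    --format=csv,noheader,nounits
}

# Print a timestamped session count every N seconds (default 5); Ctrl+C to stop.
watch_encoder_sessions() {
  interval="${1:-5}"
  while :; do
    printf '%s encoder sessions: %s\n' "$(date +%H:%M:%S)" "$(encoder_sessions)"
    sleep "$interval"
  done
}
```

Start a couple of streams that need transcoding, then run `watch_encoder_sessions`; the count should climb to match.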
